Building an AI Digital Sales Assistant

Author: Alec Lee | AX Global Strategist & Full-Stack Explorer. If you find any areas for improvement in this article, please feel free to contact me.

We’ll walk you through building an AI-powered digital sales assistant capable of:

🧠 Listening

🗣️ Speaking

🤖 Thinking

🎭 Performing

All through a real-world use case: Luxury Car AI Sales Avatar – Vendy

This project is built using the following products: Dify × TEN × Trulience × Azure × Agora × OpenAI Integration Guide

In the OpenAI module, click the blue link labeled “Get your API Key from OpenAI”.

You’ll be redirected to the official OpenAI platform: https://platform.openai.com/api-keys

On the OpenAI platform, click “+ Create new secret key” to generate a new API key.

Copy the generated key. Return to Dify, and paste the key into the OpenAI module under the following fields:

On the following page, choose your preferred method for uploading content.

We recommend using the “Import from file” option for the most straightforward setup.

Please upload your prepared internal documents, such as product brochures, sales manuals, and FAQ sheets.

If you haven’t prepared relevant materials yet, you can also use ChatGPT to help generate content. For example, we generated a Word document using ChatGPT that introduces several luxury car brands (e.g., Lexus, Audi, and BMW).

After downloading the document, return to the Dify upload page, select “Import from file”, and upload the prepared file.

✅ Once the upload is successful, click the “Next” button in the bottom-right corner to proceed to the next configuration step.

The default settings are suitable for most use cases. We recommend keeping them as they are and simply clicking “Save & Process” to complete the setup.

At this point, we’ve completed the knowledge base setup: your AI Sales Avatar now has its foundational memory in place.

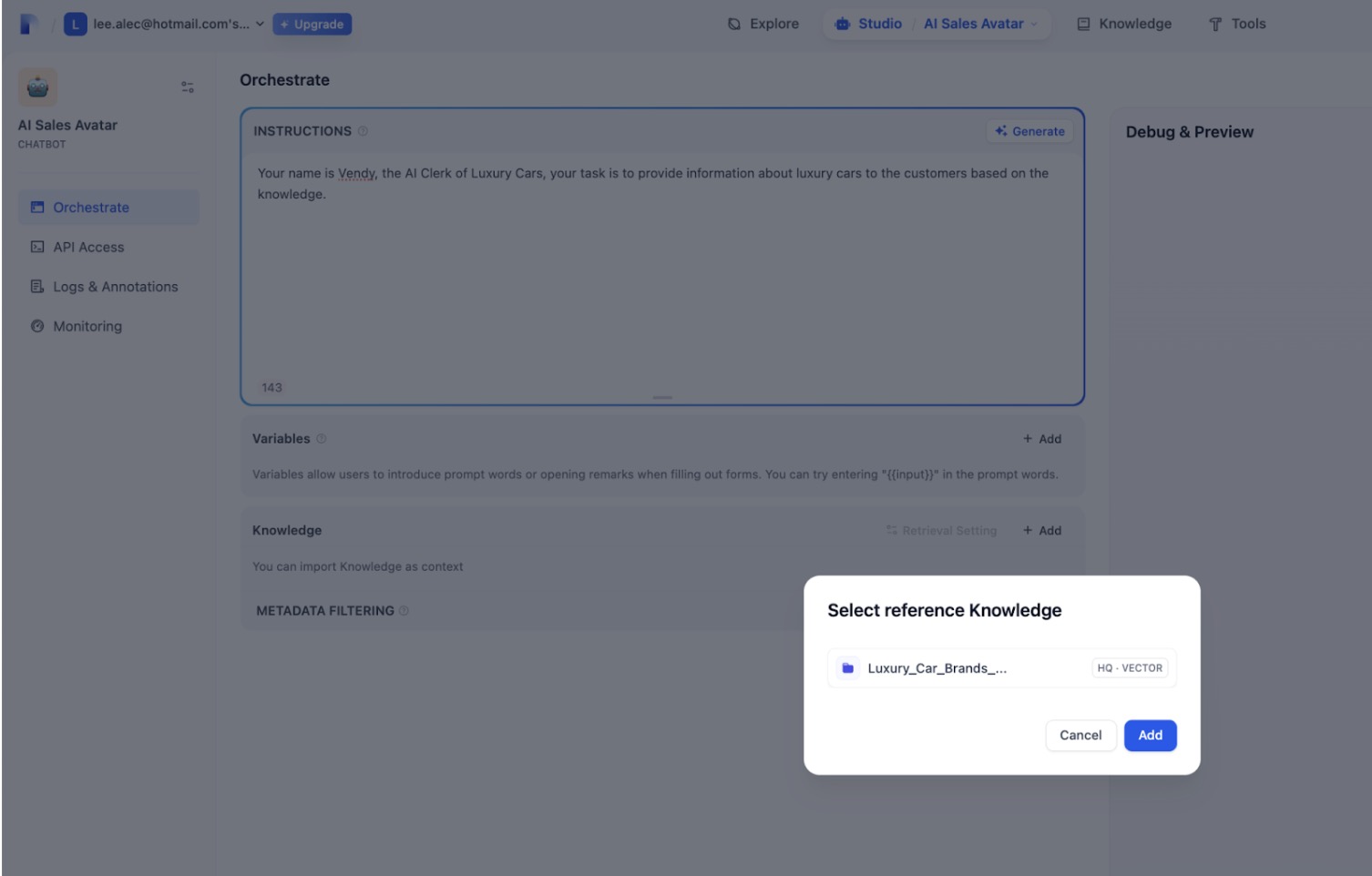

After clicking “Create”, you’ll be taken to the App’s Orchestrate editing interface.

In the INSTRUCTION section, enter content consistent with the earlier Description, such as:

Your name is Vendy, the AI Clerk of Luxury Cars.

Your task is to provide information about luxury cars to the customers based on the knowledge.

Next, scroll down to the Knowledge section.

Select the knowledge document we uploaded earlier as the reference material for the AI application.

Once the configuration is complete, use the input box on the right side of the Orchestrate interface to test your AI agent.

If the responses reference the content from your uploaded document, it means the knowledge base integration is working correctly.

After confirming everything works as expected, click the “Publish” button in the upper-right corner to officially launch your AI application.

After publishing the application, click “API Access” from the left-hand sidebar menu.

Then click the “Create Secret Key” button in the upper-right corner. This API Key is essential for integrating your AI application with the TEN platform in the next step.

Please make sure to copy and store it securely.

🎉 Congratulations!

You’ve now completed the setup of your AI Sales Avatar’s core intelligence and successfully obtained the API Key required for integration. Next, we’ll move into the Trulience platform, where we will design the digital appearance and performance capabilities of your AI Sales Avatar. In other words, we will give her a face, expression, and presence, enabling her to not only “listen, speak, and think,” but also to appear on screen and interact with users in a human-like manner!

📚 To learn more about Dify, visit the official documentation:

👉 https://docs.dify.ai/en/introduction

We’ll use the Trulience platform to generate a digital human and retrieve the necessary Avatar ID and Access Token for integration.

✅ Follow these steps:

1️⃣ Go to the Trulience website: https://trulience.com

Click the “Start For Free” button on the homepage to begin registration.

2️⃣ After registration, you’ll be redirected to the dashboard.

From the left-hand menu, click “IMAGE AVATARS”. Choose a female character to represent Vendy’s appearance, then click the green “Create Avatar” button to generate the digital human.

3️⃣ When you see the “Avatar created” message, your digital human has been successfully created.

4️⃣ Rename the default avatar name (e.g., Amanda) to Vendy using the field on the left panel.

Once renamed, you’ll see her unique Avatar ID displayed below.

5️⃣ Next, click the “Connect” button at the bottom of the screen.

Your browser will redirect to a new page. From the URL, copy the string that comes after token=; this is your Access Token.

🎉 Congratulations! You’ve successfully completed the visual appearance setup for your AI Sales Avatar and obtained both the Avatar ID and Access Token.

Log in to the Agora Console. Create a new project, and you’ll be able to access the required parameters.

After logging in, click “Projects” from the left sidebar.

Then, click “Create New” in the top-right corner to create a new project.

Once the project is created, you’ll be redirected to the project management page.

In the project list, click the copy icon next to App ID to copy it.

After copying the App ID, click the ✏️ pencil icon (Edit) on the right side of the project row to enter the Project Details page. Scroll to the bottom of the page to find the Primary Certificate section.

Click the copy icon to copy the project’s Certificate.

At this point, you’ve successfully obtained the Agora App ID and Primary Certificate, completing the required setup for enabling real-time voice communication in the TEN platform.

📚 For more information, refer to the official Agora documentation:

Next, fill in the required fields under the Basics tab:

For most cases, selecting the default option “Public access” is sufficient, which allows the service to be accessed over the internet.

🧩 Optional Step:

You can add resource tags, for example project=ai-agent, to help with future organization and management.

📝 After reviewing and confirming that all information is correct, click the “Create” button to start deploying the Azure OpenAI resource.

After deployment is complete, navigate to Azure AI Foundry.

You’ll see a welcome screen that allows you to quickly create a project and select a model.

Go to the “Model Catalog” page and select Azure AI Speech.

After clicking the Azure-AI-Speech model, you’ll be taken to its details page.

Click the “Use Service” button at the top of the page to jump to the Speech Playground.

Inside the Speech Playground, select your speech service resource.

You’ll then be able to view the corresponding API Key and Region.

Click the “Text to Speech” tab to enter the Voice Gallery, where you can preview various voice styles and select the one that best fits your AI Avatar.

Click the “View Code” button to copy the current speech service’s API Key and Region.

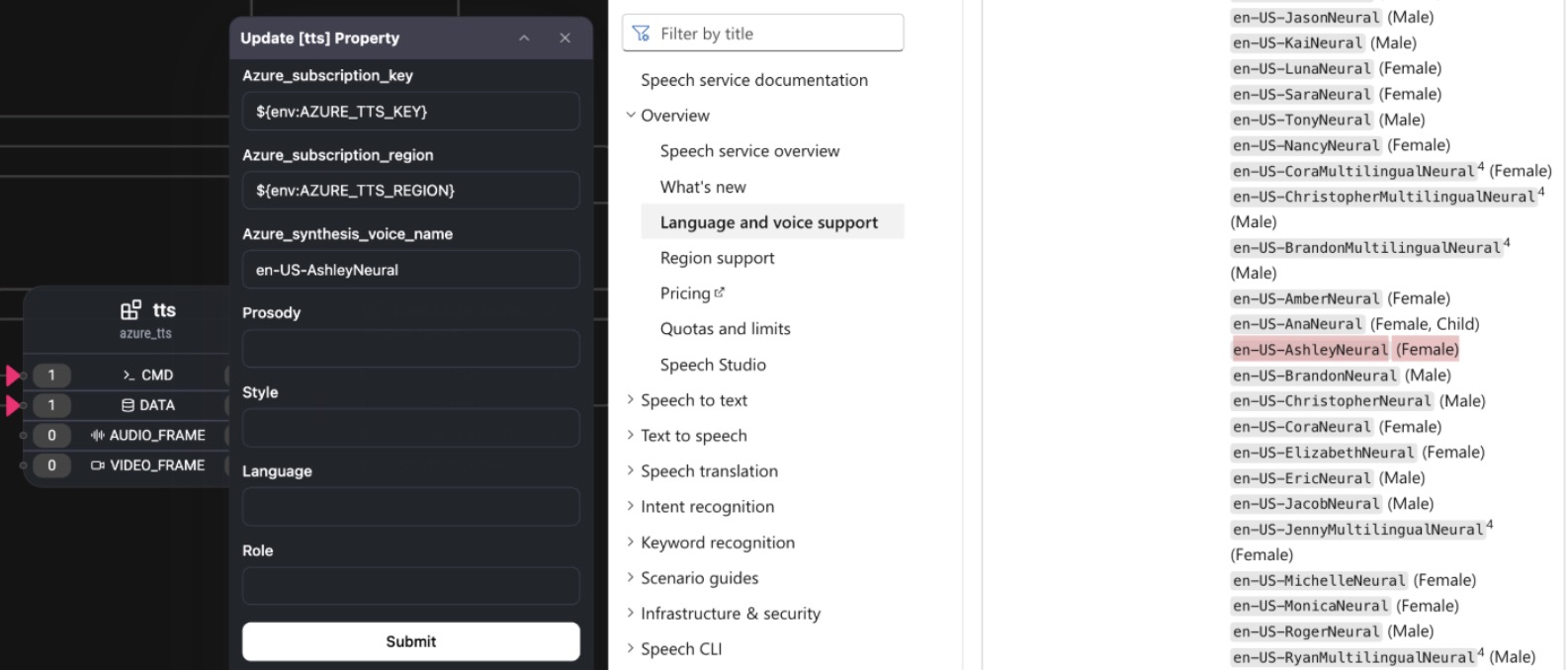

Introduction

What are the six platforms that power a “Listen–Think–Speak–Perform” AI Avatar? This project is centered on the TEN platform, integrating six key systems to create Vendy, a luxury car AI sales avatar with full conversational capability.

Vendy can:

- 👂 Listen to questions

- 🧠 Think and respond

- 🗣️ Speak answers

- 🧍 Present herself as a digital human

🧩 Platform Roles Overview

👇 This diagram outlines the role of each platform, their integration modules, and the collaboration workflow:

🧩 Roles of Each Platform in the System

👇 The diagram below illustrates how each platform contributes within the system, including their integration modules and collaborative workflow:

📌 Platform Functions Overview

- Dify + OpenAI

- Trulience

- Agora

- Azure Speech

  - AZURE_STT_KEY

  - AZURE_STT_REGION

  - AZURE_TTS_KEY

  - AZURE_TTS_REGION

- TEN (Central Platform)

TEN enables Vendy, the AI Sales Avatar, to conduct intelligent conversations and real-time interactions. 📌 Note: Each of the platforms above can be substituted with alternative open-source or SaaS services depending on your use case.

The current combination is a reference setup, designed for learning and reusability.

Building the Central Brain of the AI Sales Agent

We’ll start with three simple steps in Dify to build the “core intelligence” of our AI Sales Avatar, based on internal company data. Our demo scenario: a luxury car sales assistant named Vendy.

🧩 Step 1: Register a Dify Account & Configure Model API

👉 Go to the official Dify website and click “Get Started” in the top right corner to begin the registration process. You can sign up or log in using one of the following methods: GitHub, Gmail, or Hotmail.

After logging into Dify, follow these steps:

- Click “Settings > Model Provider” in the left-hand menu.

- On the page, locate the OpenAI module and click Install to set it up.

🧩 Step 2: Upload Internal Documents for the AI Sales Avatar

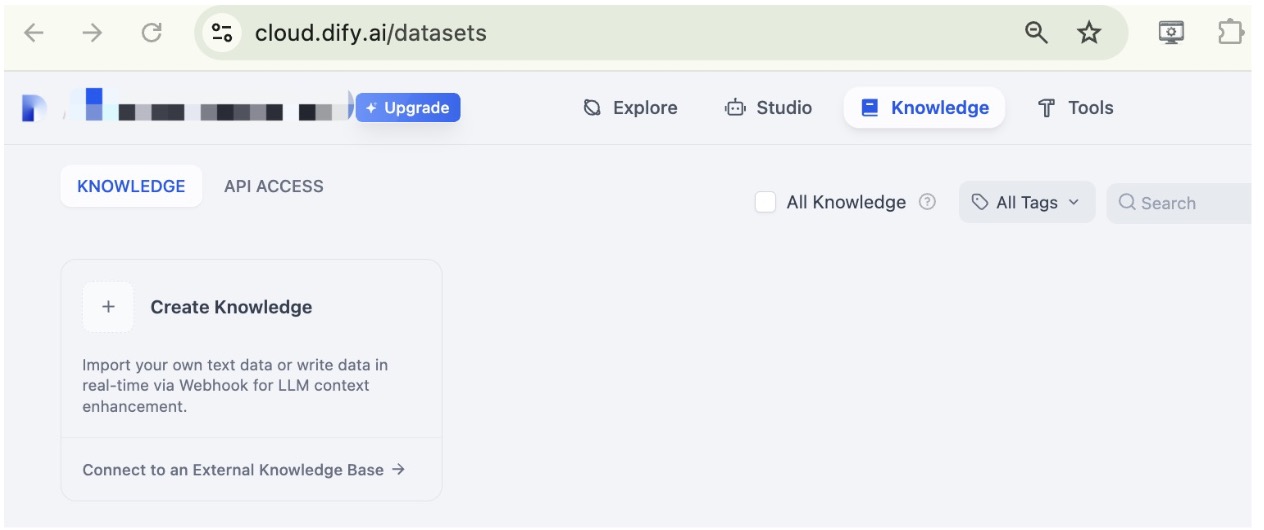

Once the model configuration is complete, your environment is ready. You can now begin building the knowledge base for your AI Sales Avatar in Dify.

- In the Dify console, click “Knowledge” from the top menu.

- Then click “Create Knowledge” to create a new knowledge dataset.

We recommend using the “Import from file” option for the most straightforward setup.

If you haven’t prepared relevant materials yet, you can also use ChatGPT to help generate content. For example, we generated a Word document using ChatGPT that introduces several luxury car brands (e.g., Lexus, Audi, and BMW).

On the Dify upload page, select “Import from file”, and upload the prepared file. ✅ Once the upload is successful, click the “Next” button in the bottom-right corner to proceed to the next configuration step.

We recommend keeping the default settings as they are and simply clicking “Save & Process” to complete the setup.

⚙️ (Optional) If you later wish to improve the AI assistant’s comprehension accuracy, you can adjust parameters based on your specific business needs.

✅ If you see the following page, it means your document has been successfully uploaded and parsed by the Dify system.

At this point, we’ve completed the knowledge base setup: your AI Sales Avatar now has its foundational memory in place.

🧩 Step 3: Create an AI Application and Connect the Knowledge Base

- In the Dify console, click the top menu item “Studio”.

- Then, click the “Create” button in the upper-right corner to start building a new App.

- App Name & Icon: Example: AI Sales Avatar

- Description: Your name is Vendy, the AI Clerk of Luxury Cars. Your task is to provide information about luxury cars to the customers based on the knowledge.

After publishing the application, click “API Access” from the left-hand sidebar menu. Then click the “Create Secret Key” button in the upper-right corner. This API Key is essential for integrating your AI application with the TEN platform in the next step.

Please make sure to copy and store it securely.

You’ve now completed the setup of your AI Sales Avatar’s core intelligence and successfully obtained the API Key required for integration. Next, we’ll move into the Trulience platform, where we will design the digital appearance and performance capabilities of your AI Sales Avatar. In other words, we will give her a face, expression, and presence, enabling her to not only “listen, speak, and think,” but also to appear on screen and interact with users in a human-like manner!

📚 To learn more about Dify, visit the official documentation:

👉 https://docs.dify.ai/en/introduction
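Before wiring the key into TEN, it can be useful to confirm that the Dify secret key works on its own. The sketch below builds a blocking request against Dify's `chat-messages` endpoint using only the Python standard library. The base URL assumes Dify Cloud (self-hosted deployments use their own address), and the `DIFY_API_KEY` environment variable, the `vendy-test` user id, and the sample question are illustrative names that do not come from this guide.

```python
import json
import os
import urllib.request

# Assumption: Dify Cloud's API base URL; a self-hosted Dify uses its own host.
DIFY_BASE_URL = "https://api.dify.ai/v1"


def build_chat_request(api_key: str, query: str, user: str = "vendy-test") -> urllib.request.Request:
    """Build (but do not send) a blocking chat request for a Dify chat app."""
    payload = {
        "inputs": {},                 # no app variables are defined in this guide
        "query": query,               # the customer's question
        "response_mode": "blocking",  # wait for the full answer instead of streaming
        "user": user,                 # hypothetical end-user identifier
    }
    return urllib.request.Request(
        url=f"{DIFY_BASE_URL}/chat-messages",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    api_key = os.environ.get("DIFY_API_KEY")  # hypothetical variable name
    if api_key:
        request = build_chat_request(api_key, "Which luxury sedans can you tell me about?")
        with urllib.request.urlopen(request, timeout=30) as response:
            print(json.load(response).get("answer"))
    else:
        print("Set DIFY_API_KEY to send a live request.")
```

If the printed answer draws on the uploaded document, the key and the knowledge base are both working, and the same key can be pasted into TEN later.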

Create the Digital AI Avatar

🧩 Step 4: Register a Trulience Account and Get Your Digital Avatar ID & Token

Now it’s time to give your AI Sales Avatar a visual identity! We’ll use the Trulience platform to generate a digital human and retrieve the necessary Avatar ID and Access Token for integration.

✅ Follow these steps:

1️⃣ Go to the Trulience website: https://trulience.com

Click the “Start For Free” button on the homepage to begin registration.

2️⃣ After registration, you’ll be redirected to the dashboard. From the left-hand menu, click “IMAGE AVATARS”. Choose a female character to represent Vendy’s appearance, then click the green “Create Avatar” button to generate the digital human.

3️⃣ When you see the “Avatar created” message, your digital human has been successfully created.

4️⃣ Rename the default avatar name (e.g., Amanda) to Vendy using the field on the left panel. Once renamed, you’ll see her unique Avatar ID displayed below.

5️⃣ Next, click the “Connect” button at the bottom of the screen.

Your browser will redirect to a new page. From the URL, copy the string that comes after token=; this is your Access Token.

🎉 Congratulations! You’ve successfully completed the visual appearance setup for your AI Sales Avatar and obtained both the Avatar ID and Access Token.
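Copying the token by hand from a long URL is easy to get wrong, so here is a small sketch that pulls the `token=` query parameter out of a pasted URL. The example URL is hypothetical and only illustrates the shape; the real page address is whatever Trulience opens after you click “Connect”.

```python
from urllib.parse import parse_qs, urlparse


def extract_access_token(connect_url: str) -> str:
    """Return the value of the token= query parameter from a pasted URL."""
    query = parse_qs(urlparse(connect_url).query)
    tokens = query.get("token")
    if not tokens:
        raise ValueError("no token= parameter found in the URL")
    return tokens[0]


if __name__ == "__main__":
    # Hypothetical URL for illustration only.
    pasted = "https://example.com/avatar/1234567890?token=abc.def.ghi"
    print(extract_access_token(pasted))
```

Note that `parse_qs` percent-decodes the value, so if a token ever contained encoded characters, the decoded form is what this returns.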

Enabling Voice Capabilities for Digital AI Avatar

🧩 Step 5: Prepare Three Key Configurations Before Running TEN Agent Locally

Before integrating the Dify agent and Trulience digital human into the TEN platform, we need to set up the accounts and credentials required for voice communication and speech processing. Specifically, you’ll need to prepare the following three components:

✅ 1. Agora Real-Time Voice Communication Parameters

TEN uses Agora to enable real-time voice interactions (i.e., “she can speak”). You’ll need the following parameters:

AGORA_APP_ID=(App ID)

AGORA_APP_CERTIFICATE=(Certificate)

Log in to the Agora Console. Create a new project, and you’ll be able to access the required parameters. 👇 Sample view of the Agora Console:

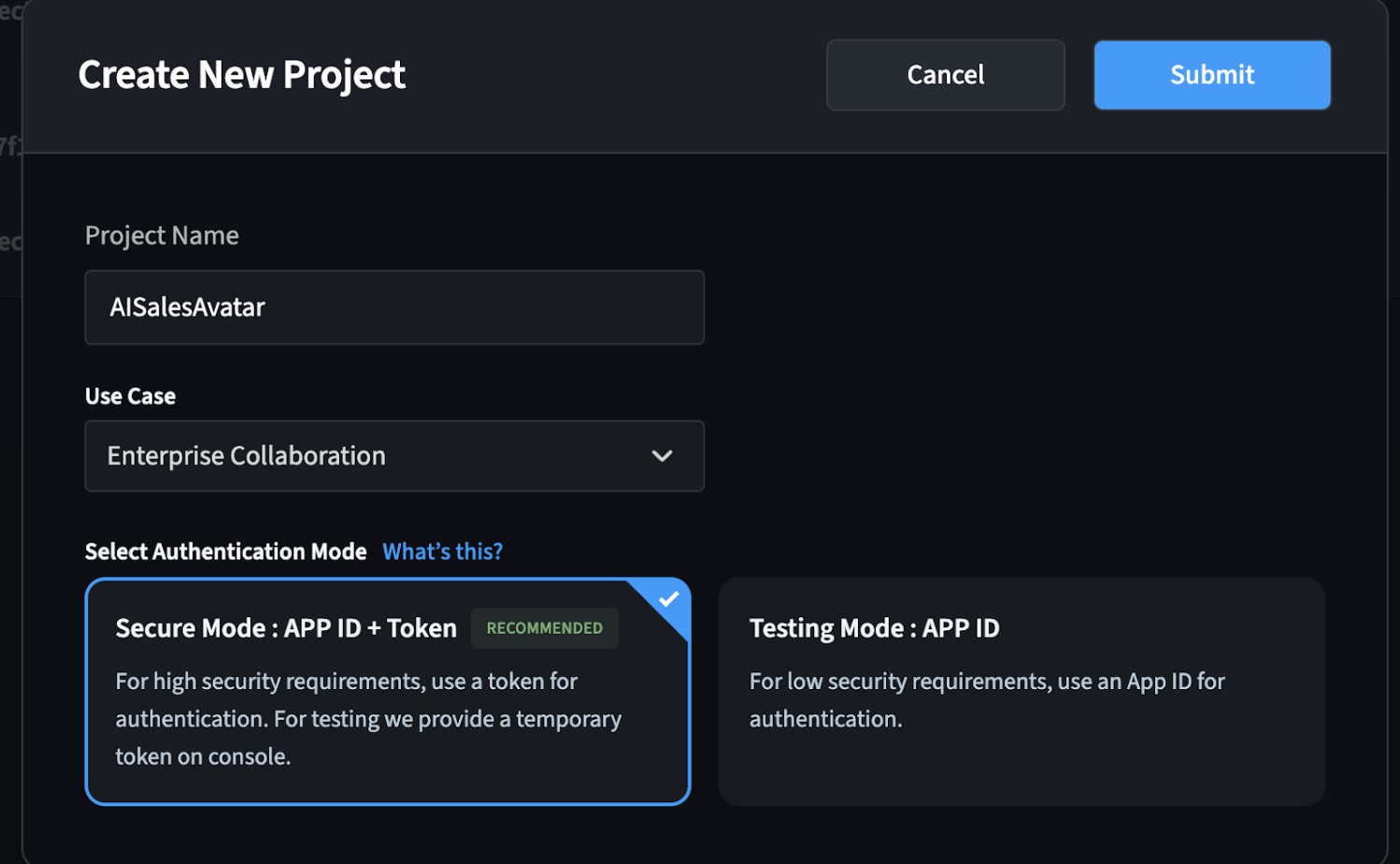

After logging in, click “Projects” from the left sidebar. Then, click “Create New” in the top-right corner to create a new project.

📝 Input Field Guide:

- Project Name: Use something like AISalesAvatar

- Use Case: Select an appropriate option, e.g., Enterprise Collaboration

- Authentication Mode: Choose Secure Mode: APP ID + Token (Recommended)

Once the project is created, you’ll be redirected to the project management page. In the project list, click the copy icon next to App ID to copy it.

After copying the App ID, click the ✏️ pencil icon (Edit) on the right side of the project row to enter the Project Details page. Scroll to the bottom of the page to find the Primary Certificate section.

Click the copy icon to copy the project’s Certificate.

At this point, you’ve successfully obtained the Agora App ID and Primary Certificate, completing the required setup for enabling real-time voice communication in the TEN platform.

📚 For more information, refer to the official Agora documentation:

✅ 2. Azure Speech Parameters (Speech-to-Text + Text-to-Speech)

Azure handles two critical voice functions:

- STT (Speech-to-Text): Converts the user’s spoken words into text

- TTS (Text-to-Speech): Converts the AI’s text responses into speech

You’ll need the following parameters:

AZURE_STT_KEY

AZURE_STT_REGION

AZURE_TTS_KEY

AZURE_TTS_REGION

For most cases, selecting the default option “Public access” is sufficient, which allows the service to be accessed over the internet.

🧩 Optional Step: You can add resource tags, for example project=ai-agent, to help with future organization and management.

📝 After reviewing and confirming that all information is correct, click the “Create” button to start deploying the Azure OpenAI resource.

After deployment is complete, navigate to Azure AI Foundry. You’ll see a welcome screen that allows you to quickly create a project and select a model.

Go to the “Model Catalog” page and select Azure AI Speech. After clicking the Azure-AI-Speech model, you’ll be taken to its details page.

Click the “Use Service” button at the top of the page to jump to the Speech Playground.

Inside the Speech Playground, select your speech service resource. You’ll then be able to view the corresponding API Key and Region.

Click the “Text to Speech” tab to enter the Voice Gallery, where you can preview various voice styles and select the one that best fits your AI Avatar.

Click the “View Code” button to copy the current speech service’s API Key and Region.

🎉 Congratulations! You’ve now completed all the required parameter preparations for running the TEN platform and connecting your AI Sales Avatar.

🧱 What’s Next: Launching the TEN Agent Platform Locally

Now we’re ready to launch the TEN Agent platform on your local machine and connect all the components we’ve prepared:

- 🧠 Intelligent Agent (via Dify)

- 🔊 Voice Capabilities (via Azure Speech + Agora)

- 👩‍💻 Digital Avatar (via Trulience)

Together, these form an AI Sales Avatar that can listen, think, speak, and perform, end to end. Let’s build it!

📚 For more details on deploying Azure Speech and OpenAI services, refer to the official documentation:

Debugging the Voice Interaction System for Digital Humans

🧩 Step 6: Set Up the TEN Agent Development Environment on macOS

To run and test the AI Sales Avatar’s real-time voice interaction system, we first need to set up the TEN Agent development environment on your Mac.

Prerequisites

🔧 Required Tools & Installation Guide

| Tool | Purpose | Installation Method |
|---|---|---|
| Docker | Container environment for running TEN | Download from the official Docker site |
| Git | Clone and manage the TEN codebase | brew install git |
| Node.js | Run the front-end (Playground) | brew install node |

✅ ① Install Docker

💡 Docker is the core runtime environment for the TEN platform: all modules will run in containerized form. Follow these steps:

- Open the official Docker website: 👉 https://www.docker.com/products/docker-desktop

- Download the .dmg installer for macOS and complete the installation.

- After installation, launch Docker from your Applications folder.

- Wait until the 🐳 Docker icon in the top menu bar turns green, indicating it’s running successfully.

- Open your Terminal and run the following command to verify the installation: docker -v. If you see a version number, Docker is installed correctly.

✅ ② Install Git

💡 Git is a version control tool used to clone and manage the TEN framework codebase.

- Install Git using Homebrew: brew install git

- Verify the installation: git --version. If a version number appears, Git is installed successfully.

✅ ③ Install Node.js

💡 Node.js is required to run the TEN Playground frontend locally.

- Install Node.js using Homebrew: brew install node

- Verify the installation: node -v. If a version number appears, Node.js has been installed successfully.

✅ ④ Clone the TEN Agent Codebase

Once Docker, Git, and Node.js are installed, the next step is to clone the TEN Agent source code and prepare the .env environment configuration.

🚀 Step 1: Clone the TEN Agent GitHub repository

Open your Terminal and run: git clone https://github.com/TEN-Framework/TEN-Agent.git

This will create a local folder named TEN-Agent, containing all the necessary code and configuration files for the platform.

📦 Step 2: Navigate to the project directory and copy the environment variable template

Change into the ai_agents subdirectory and copy the example .env file:

cd TEN-Agent/ai_agents

cp .env.example .env

The .env file contains the environment variables required to run the TEN Agent.

Next, you’ll need to manually edit this file and input the necessary credentials, including:

- Azure STT/TTS Keys and Regions

- Agora App ID & Certificate

✍ Step 3: Edit the .env File and Fill in the Following Variables

Use a text editor (we recommend Cursor or VS Code) to open the .env file. Scroll to the end of the file and add the following variables, replacing the values with your own credentials:

# Agora App ID and Certificate
AGORA_APP_ID=your_agora_app_id
AGORA_APP_CERTIFICATE=your_agora_certificate

# Azure STT Parameters
AZURE_STT_KEY=your_stt_key
AZURE_STT_REGION=your_stt_region

# Azure TTS Parameters
AZURE_TTS_KEY=your_tts_key
AZURE_TTS_REGION=your_tts_region
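A missing or still-placeholder credential is a common reason the agent fails to start, so a quick check before launching the containers can save time. The following is a minimal sketch, not part of TEN itself: it parses simple KEY=VALUE lines and reports which of the six variables named in this guide are absent, empty, or still set to a `your_...` placeholder. It assumes it is run from the directory that contains the .env file.

```python
from pathlib import Path

# The six variables this guide asks you to add to .env.
REQUIRED_KEYS = (
    "AGORA_APP_ID",
    "AGORA_APP_CERTIFICATE",
    "AZURE_STT_KEY",
    "AZURE_STT_REGION",
    "AZURE_TTS_KEY",
    "AZURE_TTS_REGION",
)


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip("\"'")
    return values


def missing_keys(text: str) -> list[str]:
    """Return required keys that are absent, empty, or still a your_... placeholder."""
    values = parse_env(text)
    return [
        key for key in REQUIRED_KEYS
        if not values.get(key) or values[key].startswith("your_")
    ]


if __name__ == "__main__":
    env_path = Path(".env")  # assumption: run from TEN-Agent/ai_agents
    if env_path.exists():
        problems = missing_keys(env_path.read_text(encoding="utf-8"))
        print("All required keys are set." if not problems else f"Still missing: {problems}")
    else:
        print("No .env file found in the current directory.")
```

The parser is deliberately simple and does not handle multi-line values or `export` prefixes, which the variables in this guide do not need.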

✅ Step ⑤: Start the TEN Agent Container and Run the Server

Once your .env file has been properly configured, the next step is to launch the TEN Agent container environment.

🐳 ① Start the Container

Navigate to the ai_agents directory and start the Docker container services:

cd ai_agents

docker compose up -d

This command will launch the following container services:

ten_agent_dev: Main development container for running the Agent

ten_agent_demo: Example agent instance

ten_agent_playground: Frontend interface for interactive testing

👇 Project structure example:

🔧 ② Enter the Container and Compile the Agent Server

Run the following command to access the development container:

docker exec -it ten_agent_dev bash

🔧 ③ Run the Initialization Script to Register the Agent

Once inside the container, execute the following command to register the Agent:

task use

This command will automatically read and link the Agent’s configuration files (such as manifest.json and property.json), and install all necessary dependencies.

✅ After completing task run, open your browser and go to the following address to launch the frontend:

👉 http://localhost:49483

If everything is running correctly, you should see the graph editing interface.

- Click on the “Graph” tab at the top of the page.

- From the dropdown menu, select your desired Agent instance, for example voice_assistant_integrated_stt (Auto Start).

Then, click the “Save” button in the bottom-right corner to save your configuration.

✅ Replace the Text-to-Speech (TTS) Module with Azure Engine

- Locate the TTS module in the graph (e.g., tts_default)

- Right-click on the module and select “Replace Node with”

- From the dropdown list, choose azure_tts

🎙️ Customizing the Digital Human’s Voice (TTS Settings)

You can customize the voice of your digital avatar to match her personality and appearance. Microsoft provides a full list of supported voice names in its speech service documentation.

👉 Visit the following link to browse available voices:

https://learn.microsoft.com/en-us/azure/ai-services/speech-service/language-support?tabs=tts#multilingual-voices

In this example, we selected an English female voice: 🗣️ en-US-AshleyNeural

In the Azure TTS module, set the following field:

azure_synthesis_voice_name=en-US-AshleyNeural
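To hear a voice outside of TEN before committing to it, one option is to call the Azure Speech text-to-speech REST endpoint directly. The sketch below builds the SSML document and the HTTP request with the standard library; it is an independent check, not how the azure_tts module works internally. The output filename, the sample sentence, and the reuse of the `AZURE_TTS_KEY` / `AZURE_TTS_REGION` environment variables are assumptions made for illustration.

```python
import os
import urllib.request
from xml.sax.saxutils import escape, quoteattr


def build_ssml(text: str, voice: str = "en-US-AshleyNeural", lang: str = "en-US") -> str:
    """Wrap plain text in a minimal SSML document for the chosen voice."""
    return (
        '<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" '
        f"xml:lang={quoteattr(lang)}>"
        f"<voice name={quoteattr(voice)}>{escape(text)}</voice>"
        "</speak>"
    )


def build_tts_request(key: str, region: str, text: str,
                      voice: str = "en-US-AshleyNeural") -> urllib.request.Request:
    """Build (but do not send) a text-to-speech request for the given region."""
    return urllib.request.Request(
        url=f"https://{region}.tts.speech.microsoft.com/cognitiveservices/v1",
        data=build_ssml(text, voice).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/ssml+xml",
            "X-Microsoft-OutputFormat": "riff-24khz-16bit-mono-pcm",
            "User-Agent": "vendy-voice-check",  # hypothetical client name
        },
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("AZURE_TTS_KEY")
    region = os.environ.get("AZURE_TTS_REGION")
    if key and region:
        request = build_tts_request(key, region, "Which luxury cars can I introduce to you today?")
        with urllib.request.urlopen(request, timeout=30) as response:
            with open("vendy_voice.wav", "wb") as audio_file:  # hypothetical output path
                audio_file.write(response.read())
        print("Wrote vendy_voice.wav")
    else:
        print("Set AZURE_TTS_KEY and AZURE_TTS_REGION to send a live request.")
```

Swapping the `voice` argument for another name from the Microsoft voice list lets you compare candidates quickly.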

🔁 Replace the LLM Module with Dify API to Connect Your Intelligent Agent

- Right-click on the llm module and select “Replace Node with”

- From the popup list, choose dify_python

After replacing the module, right-click the newly created dify_python node again and select “Update Properties” to open the configuration panel.

- In the API Key field, enter the API Key you created on the Dify platform

- In the Greeting field, you can optionally customize a welcome message (e.g., a voice greeting)

✅ Start TEN Agent and Connect Trulience AI Avatar

🚀 Launch the Frontend Interface

Once configuration is complete, open your browser and navigate to the following address to access the TEN Agent launch interface: 👉 http://localhost:3000

🧠 Select the Agent Graph (Workflow)

- Click “Select Graph” in the upper-right corner, and from the dropdown menu choose: ➤ voice_assistant_integrated_stt

- Check the box for “Enable Trulience Avatar” and fill in the following fields:

  - Avatar ID (obtained during Trulience avatar creation)

  - Avatar Token (copied from the URL after clicking “Connect” in Trulience)

- After completing the fields, click “Save changes” to apply the settings.

🗣️ Start Voice Interaction and Verify Digital Human Connectivity

✅ Click the “Connect” button in the upper-right corner to run the final test.

If everything is configured correctly, the following should happen:

- 🧍 The digital human avatar will load and appear on screen

- 🗣️ She will greet you with natural speech and ask a question, such as: “Which luxury cars can I introduce to you today?”

- 🤖 Based on her response, you can verify whether she successfully retrieved and understood content from the Dify knowledge base.

🎯 Congratulations!

You now have a fully functional AI Sales Avatar!

✅ A conversational brain powered by OpenAI / Dify

✅ Natural voice synthesis (female voice) via Azure

✅ A digital human appearance rendered by Trulience

✅ Real-time voice interaction built on the TEN platform

🌟 This is the complete and essential chain of an AI Agent:

Listen → Think → Speak → Perform → Connect

📚 To learn more, refer to the official TEN documentation:

👉 https://github.com/TEN-Framework/TEN-Agent

👉 https://theten.ai/docs/ten_agent/architecture_flow

🛠️ Next Step: Expansion Suggestions (Based on AX Architecture)

Building upon your current AI Sales Avatar, you can follow the AX Architecture roadmap to further enhance its capabilities in real-world business scenarios. The goal is to gradually integrate a full-cycle loop of:

Data → Model → Agent → User Interface → Full Business Lifecycle Management

🧑💼 Embed on the Company Homepage as a Virtual Brand Representative

By integrating Trulience’s D-Human and Avatar capabilities, you can deploy your digital human onto the company homepage or web application. Through natural voice-based Q&A, the avatar can guide visitors, capture their intent, and serve as a user-centric entry point that connects to:

- Marketing Info

- Customer Lifecycle

📦 Integrate with Databases & Order Management Systems to Evolve into an “AI Sales Representative”

Connect the Avatar with internal enterprise systems such as:

- CRM, ERP, OA, and order management tools (e.g., Salesforce, SAP, Notion DB, etc.)

- Sales lead management

- Inventory checks

- Contract generation

Contract Lifecycle, Asset Lifecycle

🔗 Integrate with Core Enterprise Platforms: From “Individual Use” to “Organizational Collaboration”

You can further modularize the Agent PF (platform) capabilities and embed them into the Data Lakes and workflow management platforms of various BUs. This unlocks cross-functional integration scenarios such as:

- AI + Finance

- AI + HR

- AI + R&D

- AI + SNS (Collaboration)

✨ Final Reflections

I’ve always believed that AI should not merely be a cold, powerful tool,

but also a warm companion to humanity,

an extension and amplifier of our individual potential.

In the near future, we may see more digital companions like “Vendy” quietly emerge across the world,

beings who listen, think, respond, and express,

using the power of technology to uphold human dignity and creativity.

If you, too, wish to join this global journey of co-creation,

we warmly invite you to explore, experiment, and share —

so that every individual can grow alongside AI,

and together, we may step into the AX era of:

“One Against a Thousand — Leaving No One Behind.”

🌱 A Humble Beginning

This document is just a small experiment, a seed of possibility.

May it quietly take root, and gently begin to sprout

in a future where humans and AI coexist in harmony.
