How plugins work

- Owners and admins

- Editors and members

Install, configure, and remove plugins for the entire workspace

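Installing plugins

Marketplace

Official and partner plugins, tested and maintained

GitHub

Install from any public repository using its URL and a version

Local upload

Custom .zip packages for private or internal plugins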

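What plugins really are

Think of plugins as the bridge between Dify and the outside world:

Model providers

Every LLM in Dify (OpenAI, Anthropic, and others) is actually a plugin

Tools and functions

API calls, data processing, calculations: all of these are plugin-based

Custom endpoints

Expose your Dify app as an API that external systems can call

Reverse calls

Plugins can call back into Dify to use models, tools, or workflows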

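Workspace plugin settings

Control plugin permissions in workspace settings:

Install permissions

Everyone - any member can install plugins

Admins only - only workspace admins can install plugins (recommended)

Debug access

Everyone - all members can debug plugin issues

Admins only - debugging is restricted to admins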
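
The two settings above share the same shape: each is either open to everyone or limited to admins. A minimal sketch of that check, assuming hypothetical `Role` and `Scope` enums and assuming owners count as admins (none of these names come from Dify's API):

```python
from enum import Enum


class Role(Enum):
    OWNER = "owner"
    ADMIN = "admin"
    EDITOR = "editor"
    MEMBER = "member"


class Scope(Enum):
    EVERYONE = "everyone"
    ADMINS_ONLY = "admins_only"


# Assumption: owners and admins are both treated as "admins" by these settings.
ADMIN_ROLES = {Role.OWNER, Role.ADMIN}


def is_allowed(role: Role, scope: Scope) -> bool:
    """Return True if a member with `role` passes a setting set to `scope`."""
    if scope is Scope.EVERYONE:
        return True
    return role in ADMIN_ROLES


# Hypothetical workspace configuration: installs locked down, debugging open.
install_scope = Scope.ADMINS_ONLY
debug_scope = Scope.EVERYONE

print(is_allowed(Role.EDITOR, install_scope))  # False
print(is_allowed(Role.EDITOR, debug_scope))    # True
```

Keeping install and debug as two independent scopes mirrors the settings page, where each option is chosen separately.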

Auto-update

Choose an update strategy (security updates only or all updates) and specify which plugins to include or exclude
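
One way to picture how a strategy and the include/exclude lists could combine is sketched below. The `AutoUpdatePolicy` class, its field names, and the precedence rule (exclude wins over include, and an empty include list means "all plugins") are illustrative assumptions, not Dify's actual data model.

```python
from dataclasses import dataclass, field


@dataclass
class AutoUpdatePolicy:
    # Assumed strategy values: "security_only" or "all".
    strategy: str = "security_only"
    # Assumption: an empty include set means every plugin is eligible.
    include: set[str] = field(default_factory=set)
    exclude: set[str] = field(default_factory=set)


def should_auto_update(
    policy: AutoUpdatePolicy, plugin_id: str, is_security_update: bool
) -> bool:
    """Decide whether an available update should be applied automatically."""
    if policy.strategy not in ("security_only", "all"):
        raise ValueError(f"unknown strategy: {policy.strategy!r}")
    if plugin_id in policy.exclude:
        return False  # assumed precedence: exclusion always wins
    if policy.include and plugin_id not in policy.include:
        return False
    if policy.strategy == "all":
        return True
    return is_security_update


# Hypothetical plugin identifiers, for illustration only.
policy = AutoUpdatePolicy(strategy="security_only", exclude={"acme/legacy-tool"})
print(should_auto_update(policy, "acme/search", is_security_update=True))       # True
print(should_auto_update(policy, "acme/search", is_security_update=False))      # False
print(should_auto_update(policy, "acme/legacy-tool", is_security_update=True))  # False
```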

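Plugin installation restrictions

Enterprise edition only

- The "Install plugin" dropdown under Plugins → Explore Marketplace may show a limited set of options

- The install confirmation dialog indicates whether a plugin is blocked by policy

- When you import an app (DSL file) that includes plugins, you will see a notice about restricted plugins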

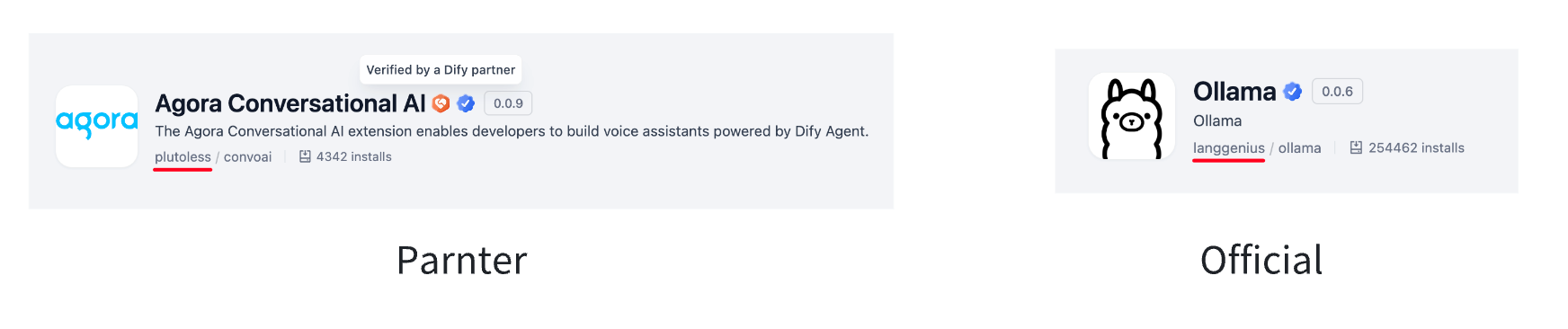

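Look for these badges to identify plugin types. Depending on the admin's settings, your workspace may allow only certain types.

If you cannot install a plugin you need, contact your workspace admin. Admins control which plugin sources (Marketplace, GitHub, local files) and types (official, partner, third-party) are allowed.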
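
The source and type restrictions described above amount to a two-part allow-list. The sketch below models that idea; `InstallPolicy`, `check_install`, and the string labels are hypothetical names chosen for illustration and do not reflect how Dify stores or enforces its policy.

```python
from dataclasses import dataclass

# Labels taken from the categories named in the text; the spellings are assumed.
KNOWN_SOURCES = frozenset({"marketplace", "github", "local"})
KNOWN_TYPES = frozenset({"official", "partner", "third_party"})


@dataclass(frozen=True)
class InstallPolicy:
    allowed_sources: frozenset
    allowed_types: frozenset


def check_install(policy: InstallPolicy, source: str, plugin_type: str) -> tuple[bool, str]:
    """Return (allowed, reason) for installing a plugin under `policy`."""
    if source not in KNOWN_SOURCES:
        raise ValueError(f"unknown source: {source!r}")
    if plugin_type not in KNOWN_TYPES:
        raise ValueError(f"unknown plugin type: {plugin_type!r}")
    if source not in policy.allowed_sources:
        return False, f"source '{source}' is blocked by policy"
    if plugin_type not in policy.allowed_types:
        return False, f"type '{plugin_type}' is blocked by policy"
    return True, "allowed"


# Example: a workspace that only permits Marketplace installs of vetted plugins.
policy = InstallPolicy(
    allowed_sources=frozenset({"marketplace"}),
    allowed_types=frozenset({"official", "partner"}),
)
print(check_install(policy, "marketplace", "official"))
print(check_install(policy, "github", "third_party"))
```

Returning a reason alongside the boolean matches the behavior described for the confirmation dialog, which tells the user when a plugin is blocked by policy.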

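Building custom plugins

When you need custom functionality, develop a plugin with Dify's SDK:

- Get a debug key from Settings → Plugins → Debug

- Build and test your plugin locally

- Package it as a .zip file that includes the manifest and dependencies

- Distribute it privately or publish it to the Marketplace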
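
The packaging step above can be sketched with Python's standard `zipfile` module. This is a generic illustration, not Dify's own packaging tool: the manifest file name `manifest.yaml`, the `package_plugin` helper, and the directory layout are assumptions, and the official tooling may package plugins differently.

```python
import zipfile
from pathlib import Path

# Assumed manifest file name; check the SDK documentation for the real one.
MANIFEST_NAME = "manifest.yaml"


def package_plugin(plugin_dir: Path, output_zip: Path) -> list[str]:
    """Zip every file under `plugin_dir` and return the archived paths."""
    plugin_dir = Path(plugin_dir)
    output_zip = Path(output_zip)
    if not (plugin_dir / MANIFEST_NAME).is_file():
        raise FileNotFoundError(f"{MANIFEST_NAME} not found in {plugin_dir}")

    # Collect files before creating the archive so the archive itself is
    # never included, even if it is written inside plugin_dir.
    files = [
        p for p in sorted(plugin_dir.rglob("*"))
        if p.is_file() and p.resolve() != output_zip.resolve()
    ]

    names = []
    with zipfile.ZipFile(output_zip, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in files:
            arcname = path.relative_to(plugin_dir).as_posix()
            zf.write(path, arcname)
            names.append(arcname)
    return names
```

A call such as `package_plugin(Path("my_plugin"), Path("my_plugin.zip"))` would fail early if the manifest is missing, which catches the most common packaging mistake before the archive is uploaded.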